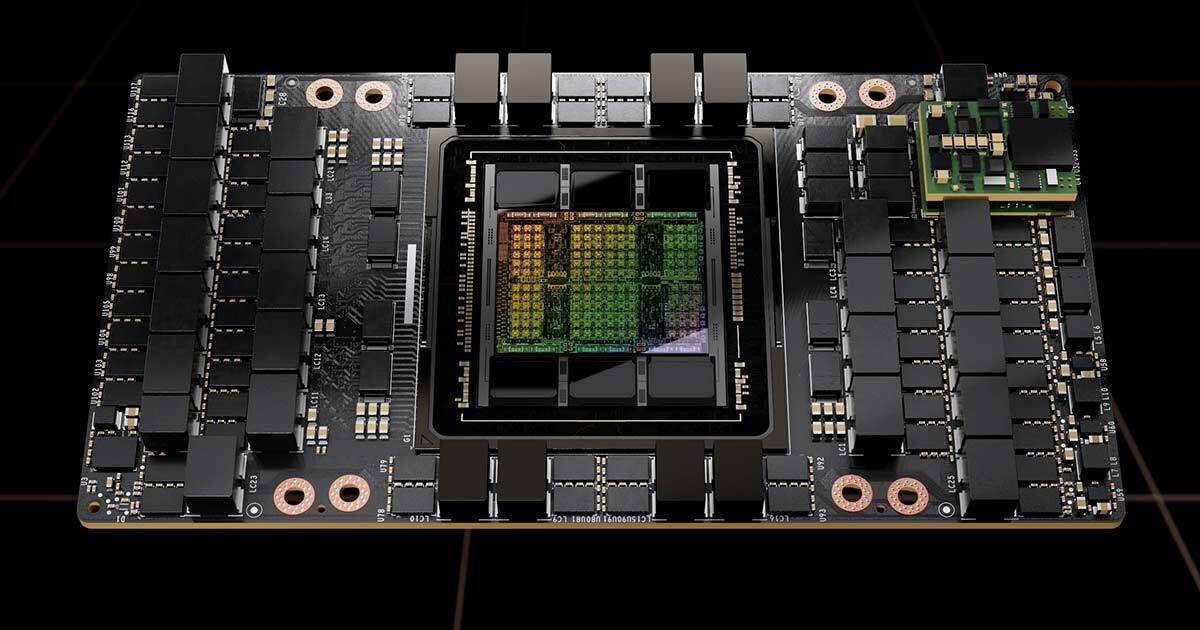

NVIDIA looks to be preparing a couple of new H100 Hopper GPUs with new memory configurations: 96GB and 64GB variants of the H100 Hopper GPU have been spotted on the PCI Device ID list.

If the listings are correct, the new SXM5-based models of NVIDIA's datacenter-focused H100 Hopper GPU would have 96GB and 64GB of HBM3 memory, respectively. 96GB is an unusual capacity for NVIDIA to land on, but it isn't the company's first odd figure: NVIDIA already offers the H100 NVL (for Large Language Models) with 94GB of HBM.

Why 96GB? Most likely, the H100 Hopper GPU physically carries the full 120GB of HBM3, but 24GB of it is disabled at the level of a particular layer within the stack rather than by switching off an entire stack. We have previously seen rumours of a 120GB version of the H100 Hopper GPU, so we should expect more SKUs of the NVIDIA H100 Hopper GPU to arrive in the future.