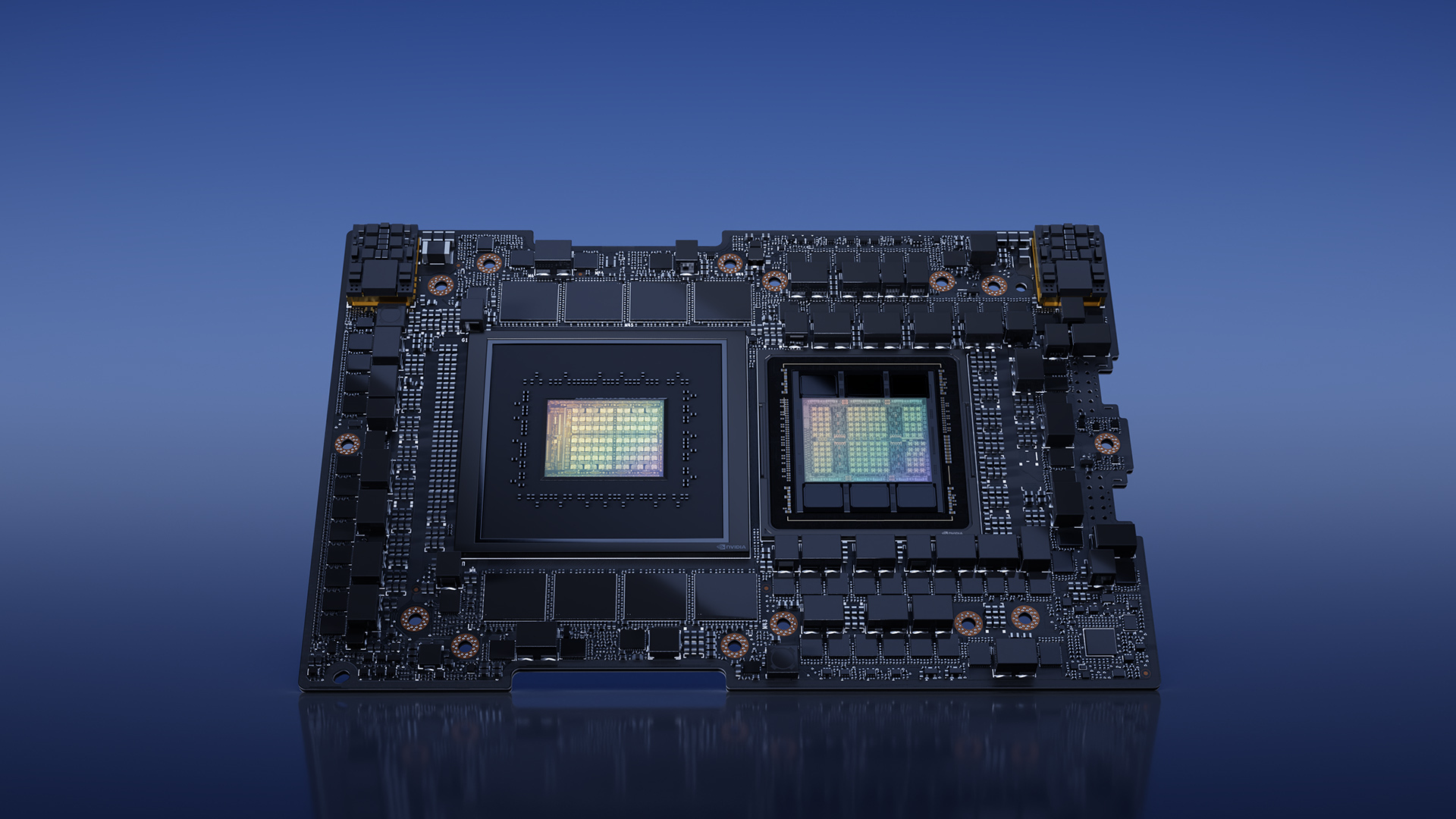

NVIDIA has just unveiled its next-gen GH200 Grace Hopper platform. It is built around a new Grace Hopper Superchip that the company calls the world's first processor rocking next-gen HBM3e memory.

NVIDIA's new GH200 is a monster in its own right. The dual GH200 Grace Hopper configuration offers 3x the memory bandwidth of its predecessor and up to 282GB of ultra-fast HBM3e memory. HBM3e is 50% faster than the already-fast HBM3 standard and delivers up to 5TB/sec of memory bandwidth per GPU, so a dual Grace Hopper system offers a combined 10TB/sec.
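The dual-configuration figures come down to simple arithmetic. The sketch below assumes the 282GB capacity and the bandwidth are split evenly across the two superchips (NVIDIA only quotes the combined totals) and uses decimal units (1TB = 1000GB). The helper name `dual_config_summary` is purely illustrative.

```python
# Back-of-the-envelope math for the dual GH200 Grace Hopper configuration.
# Assumption: capacity and bandwidth are split evenly across the two superchips.

NUM_SUPERCHIPS = 2
BANDWIDTH_PER_GPU_TBPS = 5.0   # "up to 5TB/sec of memory bandwidth per GPU"
TOTAL_CAPACITY_GB = 282        # "up to 282GB of HBM3e" for the dual configuration


def dual_config_summary(num_chips: int, bw_per_gpu_tbps: float, total_gb: int) -> dict:
    """Return the combined bandwidth and the implied per-superchip capacity."""
    return {
        "combined_bandwidth_tbps": num_chips * bw_per_gpu_tbps,
        "capacity_per_superchip_gb": total_gb / num_chips,
    }


if __name__ == "__main__":
    summary = dual_config_summary(NUM_SUPERCHIPS, BANDWIDTH_PER_GPU_TBPS, TOTAL_CAPACITY_GB)
    print(f"Combined bandwidth:  {summary['combined_bandwidth_tbps']:.0f} TB/sec")
    print(f"HBM3e per superchip: {summary['capacity_per_superchip_gb']:.0f} GB")
```

Under the even-split assumption, each superchip carries 141GB of HBM3e. That per-chip number is derived here, not taken from NVIDIA's statement.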

To put that into gaming PC terms, NVIDIA's flagship gaming GPU, the GeForce RTX 4090, has roughly 1TB/sec of memory bandwidth.
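The RTX 4090's roughly 1TB/sec figure comes from its memory interface: a 384-bit bus of GDDR6X running at 21Gbps per pin. These two numbers are the card's published specs, not figures from this article. The sketch below derives peak bandwidth from them and compares the result with the GH200 numbers quoted above, using decimal units (1TB = 1000GB).

```python
def peak_bandwidth_gbps(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/sec: bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


RTX_4090_BW_GBPS = peak_bandwidth_gbps(384, 21.0)  # 384-bit GDDR6X at 21Gbps -> 1008.0 GB/sec
GH200_PER_GPU_GBPS = 5_000                         # "up to 5TB/sec per GPU"
GH200_DUAL_GBPS = 10_000                           # "10TB/sec" for the dual configuration

if __name__ == "__main__":
    print(f"RTX 4090 peak bandwidth:   {RTX_4090_BW_GBPS:.0f} GB/sec")
    print(f"One GH200 GPU vs RTX 4090: {GH200_PER_GPU_GBPS / RTX_4090_BW_GBPS:.1f}x")
    print(f"Dual GH200 vs RTX 4090:    {GH200_DUAL_GBPS / RTX_4090_BW_GBPS:.1f}x")
```

These are peak theoretical figures. By this rough measure, a dual GH200 system has about 10x the memory bandwidth of the RTX 4090, and a single GH200 GPU has about 5x.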

NVIDIA counts more than 400 system configurations built on combinations of its very latest CPU, GPU, and DPU architectures, including Grace, Hopper, Ada Lovelace, and BlueField. The company continues to dominate the AI industry, which is expanding faster than anyone would have thought, largely driven by the mega-success of ChatGPT. NVIDIA can't make GPUs fast enough, so the new GH200 is going to mean huge upgrades for AI and HPC firms.

NVIDIA describes the GH200 Grace Hopper platform: "NVIDIA today announced the next-generation NVIDIA GH200 Grace Hopper platform — based on a new Grace Hopper Superchip with the world’s first HBM3e processor — built for the era of accelerated computing and generative AI. Created to handle the world’s most complex generative AI workloads, spanning large language models, recommender systems and vector databases, the new platform will be available in a wide range of configurations.

The dual configuration — which delivers up to 3.5x more memory capacity and 3x more bandwidth than the current generation offering — comprises a single server with 144 Arm Neoverse cores, eight petaflops of AI performance and 282GB of the latest HBM3e memory technology. HBM3e memory, which is 50% faster than current HBM3, delivers a total of 10TB/sec of combined bandwidth, allowing the new platform to run models 3.5x larger than the previous version, while improving performance with 3x faster memory bandwidth"
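NVIDIA's ratios can be worked backwards to see what "current generation" baseline they imply. This is a rough sketch. NVIDIA's statement does not name the baseline system, and the code reads "3.5x more" as "3.5 times as much". The outputs are therefore derived estimates, not published specs. The helper name `implied_baseline` is hypothetical.

```python
TOTAL_CAPACITY_GB = 282       # dual-configuration HBM3e capacity
TOTAL_BANDWIDTH_TBPS = 10.0   # dual-configuration combined bandwidth
CAPACITY_RATIO = 3.5          # "up to 3.5x more memory capacity"
BANDWIDTH_RATIO = 3.0         # "3x more bandwidth"


def implied_baseline(new_value: float, ratio: float) -> float:
    """Divide a new figure by its claimed improvement ratio to estimate the baseline.

    Assumes "Nx more" means "N times as much", not "N times more on top of the original".
    """
    return new_value / ratio


if __name__ == "__main__":
    cap = implied_baseline(TOTAL_CAPACITY_GB, CAPACITY_RATIO)
    bw = implied_baseline(TOTAL_BANDWIDTH_TBPS, BANDWIDTH_RATIO)
    print(f"Implied baseline capacity:  {cap:.1f} GB")
    print(f"Implied baseline bandwidth: {bw:.2f} TB/sec")
```

Under that reading, the comparison point works out to roughly 80GB of memory and about 3.3TB/sec of bandwidth.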